How to find and fix slow drawing using Instruments

Layer blending, misaligned images, and more

Part 1 in a series of tutorials on Instruments:

- How to find and fix slow drawing using Instruments

- How to find and fix memory leaks using Instruments

- How to find and fix slow code using Instruments

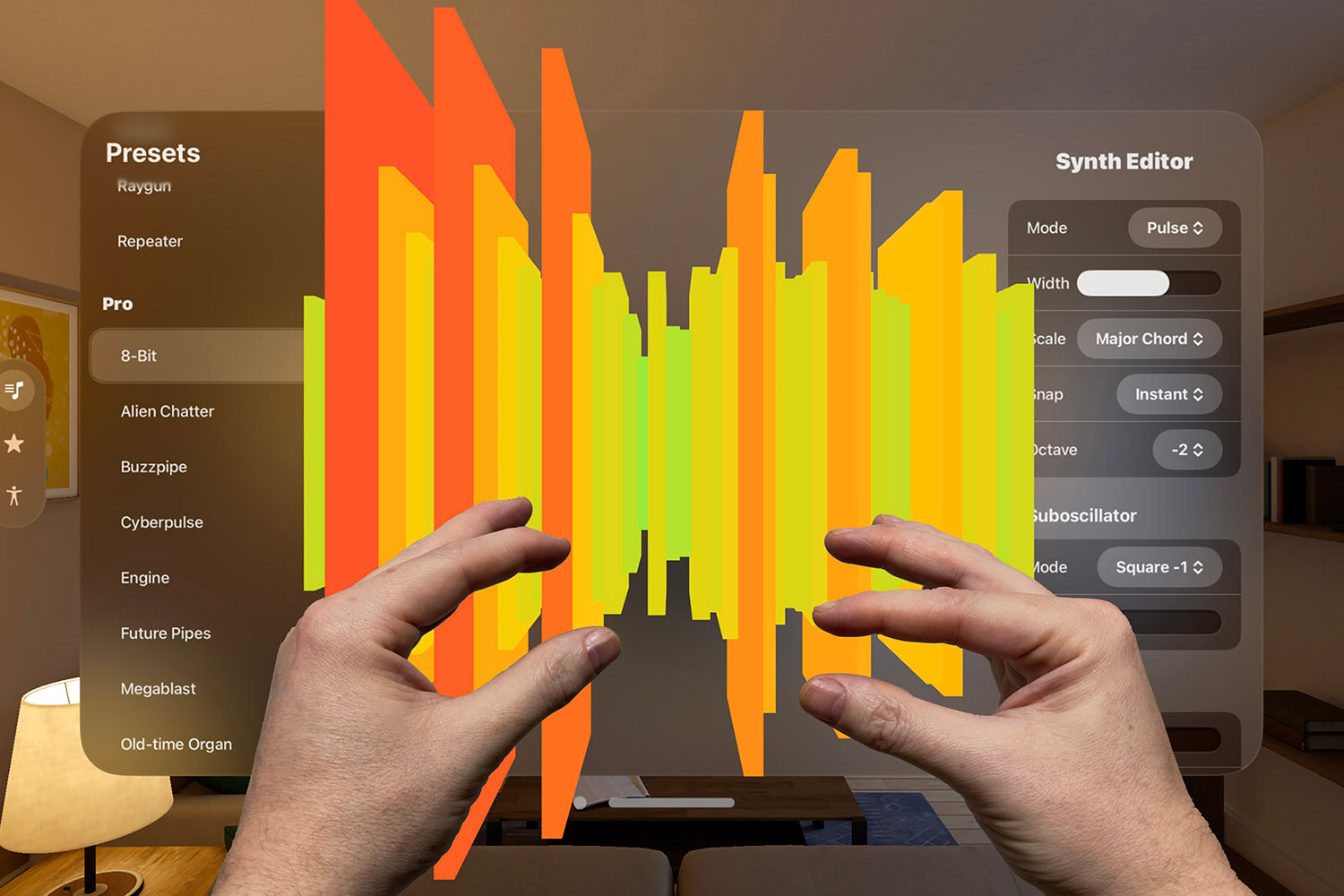

The very first iPhone refreshed its display 60 times a second, which gives us just 0.0167 seconds (about 16.7 milliseconds) to perform any app updates and render the current screen. Today the iPad Pro goes even faster: its 120Hz ProMotion technology shrinks our time slice to just 0.0083 seconds, so the pressure for efficient drawing has never been greater.
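To make that arithmetic concrete, here is a small Swift sketch that turns a refresh rate into a per-frame time budget. The frameBudget(refreshRate:) function is purely illustrative; it is not an Apple API.

```swift
import Foundation

/// Time available to produce a single frame at a given refresh rate, in seconds.
/// Illustrative helper only, not part of any Apple framework.
func frameBudget(refreshRate hertz: Double) -> Double {
    precondition(hertz > 0, "Refresh rate must be positive")
    return 1.0 / hertz
}

// Print the budget in milliseconds for a 60Hz display and a 120Hz ProMotion display.
for hertz in [60.0, 120.0] {
    let milliseconds = frameBudget(refreshRate: hertz) * 1000
    print(String(format: "%.0fHz: %.2fms per frame", hertz, milliseconds))
}
```

Running it prints 16.67ms for 60Hz and 8.33ms for 120Hz. Roughly speaking, everything your app does for a frame, layout and drawing included, has to fit inside that window.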

Fortunately, Instruments comes with a range of tools to help us identify and resolve drawing issues, and I want to walk you through some of them using a real example. This is a topic I talk about a lot because it really matters, and every time I speak or write about it some folks learn it for the first time.

First, you need to download the sample project that I created for this tutorial. It's available on GitHub, so please download that now.

Warning: That project is specifically written to be bad – please don’t use it as a learning exercise, other than as a way to find and fix problems using Instruments!

Go ahead and run the app on a real iPad, and try using it. It doesn't do a great deal: you'll see a selection of stories in a collection view, and tapping one brings up more details. If you leave it for a few seconds you'll see the story view scroll gently so you can sit back and relax while reading the news, and there are buttons to dismiss the story positively or negatively.

Note: Although you can spot many issues in the simulator, it’s usually best to use a real device.

I have a 2017 iPad Pro, and this app doesn’t scroll well on that device – in fact, it jumps around a little while scrolling. There are a few reasons for this, and we’re going to look at each of them to see how Instruments can help us find problems and make performance better.


Measuring the problem

Before you dive into making any changes, let’s first see what Instruments thinks of our app. Press Cmd+I to build and run the app using Instruments – this isn’t the same as the build you made previously, because Xcode builds for Instruments using release configuration so you get optimized code.

When Instruments launches you’ll be asked what instrument you want to use. In this tutorial we’ll be using the Core Animation instrument, so please select Core Animation and click Choose.

Instruments likes to show a lot of information at the same time, but for now all you need to do is click the record button in the top-left corner. This will ask Instruments to launch your app and monitor its performance.

This instrument has one main readout: the rate at which your app is drawing frames. In an ideal world this should be a solid 60 for most iOS devices and 120 for iPad Pro, but there are two catches:

- ProMotion actually uses a variable frame rate, so you might see 110 or some other in-between number.

- If your app isn't moving (if you aren't scrolling around, for example), you'll see 0, because no drawing is happening. To get accurate figures you should scroll around quickly.

Once you have ten seconds or so of frame rate information, click the stop button in the top-left corner. This figure is our baseline, so it's worth noting it down. On my iPad Pro I was getting a lot of variation, anywhere between 45 and 72 frames per second, which is nothing like the 120fps that iPad Pro is capable of.
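If you jot down the per-second readings, a tiny helper can turn them into a baseline you can compare against after each fix. The summarize(_:) function and FrameRateSummary type below are hypothetical; they are a sketch that assumes you already have the readings as numbers, and they skip the zero readings recorded while nothing was moving.

```swift
/// Minimum, maximum and average of a set of frame rate readings (frames per second).
struct FrameRateSummary: Equatable {
    let minimum: Double
    let maximum: Double
    let average: Double
}

/// Hypothetical helper: summarizes per-second FPS readings, ignoring zero
/// readings taken while nothing was being drawn.
func summarize(_ samples: [Double]) -> FrameRateSummary? {
    let active = samples.filter { $0 > 0 }
    guard let minimum = active.min(), let maximum = active.max() else { return nil }
    let average = active.reduce(0, +) / Double(active.count)
    return FrameRateSummary(minimum: minimum, maximum: maximum, average: average)
}

let baseline = summarize([0, 45, 72, 60, 0])
```

Here baseline has a minimum of 45, a maximum of 72 and an average of 59. Keeping the zeros out matters, otherwise idle moments would drag the average down and hide real improvements.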

Problem 1: Image blending

Every pixel on your device's screen needs to be drawn; that much is inevitable. However, sometimes a pixel's final color has to be calculated by blending two or more values, which is inefficient because iOS needs to do extra work to draw those pixels.

For example, if you have a white background and render a black UIView on top, that’s fast for iOS to do: it can just write the black color directly over the white color, as if the white didn’t exist. However, if the UIView has opacity below 100% – even 99% – then iOS needs to blend the black color with the existing white color, which is slower.
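The difference between those two cases comes down to simple arithmetic. Here is a CPU sketch of standard "source over" compositing onto an opaque background; the real work happens on the GPU and the RGB type and composite(_:alpha:over:) function are illustrative, but the math shows why anything below 100% opacity costs more.

```swift
/// A color with red, green and blue components in the range 0...1.
struct RGB: Equatable {
    var red: Double
    var green: Double
    var blue: Double
}

/// "Source over" compositing of a color with the given alpha on top of an opaque background.
func composite(_ source: RGB, alpha: Double, over destination: RGB) -> RGB {
    // Fast path: a fully opaque source simply replaces whatever is underneath.
    if alpha >= 1 { return source }
    // Otherwise every channel needs a multiply-add against the existing pixel.
    func mix(_ s: Double, _ d: Double) -> Double { s * alpha + d * (1 - alpha) }
    return RGB(red: mix(source.red, destination.red),
               green: mix(source.green, destination.green),
               blue: mix(source.blue, destination.blue))
}

let white = RGB(red: 1, green: 1, blue: 1)
let black = RGB(red: 0, green: 0, blue: 0)
let opaque = composite(black, alpha: 1.0, over: white)        // plain black, no math needed
let translucent = composite(black, alpha: 0.99, over: white)  // every channel blended
```

At 99% opacity the result is almost indistinguishable from black (each channel comes out around 0.01), yet the existing white pixel still had to be read and mixed in.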

Blending layers is costly, so Instruments has a way to help us identify when it happens. To try it now, click Debug Options at the bottom of the Instruments window, then check the Color Blended Layers box in the popup that appears.

What you'll see is that the collection view background is green, but the navigation bar and collection view cells are red. You might even notice that some parts, such as the text in the title bar, are rendered in darker shades of red.

These colors represent blended layers, where green layers use no blending (and thus draw quickly) and red layers use blending (and thus draw slowly). The darker red shades are used to signify more blending.

This might be a surprise: all the images being provided are square and opaque. In fact, if you look at the project you’ll see they are all JPEGs – they literally can’t be transparent, because the JPEG format doesn’t allow it.

However, this app doesn’t just show images directly: it loads the image into RAM, draws a translucent box, then renders a headline on top. Even though the original image occupies the full space of the final rendered image, UIKit silently injects an alpha channel in the process.

Sure, that alpha channel reads 1.0 (completely opaque) for all pixels, but the very presence of that channel makes UIKit render the image with blending.

The fix for this is nice and easy. Here’s the way rendering starts in ViewController.swift:

```swift
let config = UIGraphicsImageRendererFormat()
let renderer = UIGraphicsImageRenderer(size: drawRect.size, format: config)
```

To fix that, we just need to make the configuration opaque, like this:

```swift
let config = UIGraphicsImageRendererFormat()
// An opaque format means the rendered image gets no alpha channel.
config.opaque = true
let renderer = UIGraphicsImageRenderer(size: drawRect.size, format: config)
```

Now UIKit won't give the final image an alpha channel, so color blending won't happen.

With that one tiny change, make sure you check in Instruments to see if blending has improved. All being well you should now see green across most of the screen, which is much better.

Problem 2: Misaligned images

If you render a 100x100 image into a 100x100 space, iOS can simply copy pixels from one place to another: it's a 1:1 mapping, so it takes no special computation. However, if your space has different dimensions, whether that's 200x200 or even 100x101, iOS needs to stretch the source image to fit the new space using a process called interpolation.
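To see why a mismatch costs more than a copy, here is a one-dimensional sketch of linear interpolation on a single row of grayscale pixels. It is only an illustration: the resample(_:to:) function is hypothetical, and the GPU's actual filtering may differ, but the shape of the work is the same, since every destination pixel has to be computed from its neighbors.

```swift
/// Resamples one row of grayscale pixel values to `newWidth` pixels using
/// linear interpolation. A same-size request is a straight copy.
func resample(_ row: [Double], to newWidth: Int) -> [Double] {
    precondition(!row.isEmpty && newWidth > 0, "Need source pixels and a positive width")
    // 1:1 mapping: no computation needed, just copy the pixels.
    if newWidth == row.count { return row }
    let scale = Double(row.count) / Double(newWidth)
    return (0..<newWidth).map { (x: Int) -> Double in
        // Map the center of each destination pixel back into source coordinates.
        let sourceX = (Double(x) + 0.5) * scale - 0.5
        let clamped = min(max(sourceX, 0), Double(row.count - 1))
        let left = Int(clamped.rounded(.down))
        let right = min(left + 1, row.count - 1)
        let fraction = clamped - Double(left)
        // Weight the two nearest source pixels by how close each one is.
        return row[left] * (1 - fraction) + row[right] * fraction
    }
}

let stretched = resample([0, 10], to: 4)
```

Here stretched is [0.0, 2.5, 7.5, 10.0]: two of the four output pixels never existed in the source and had to be calculated, whereas resampling to the original width returns the row untouched.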

Just like with blended layers, Instruments can help us identify when images are misaligned in this way. Go back to the Debug Options menu in Instruments, uncheck Color Blended Layers and instead please check Color Misaligned Images. This should show all your collection view cells in yellow, with the rest of the screen white – Instruments’ way of showing us that our images are misaligned.

This time the problem is here:

```swift
let drawRect = CGRect(x: 0, y: 0, width: 300, height: 170)
```

That hard-codes the image rendering size to 300x170. That might have been correct at some point, but if you check the storyboard you'll see the collection view cells are set to 300x180, a small but important difference.
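As a rough sketch of the size check involved, the hypothetical helper below compares an image's pixel dimensions with the pixel dimensions of the view that displays it. It only looks at size; treat it as an illustration of the 300x170 versus 300x180 mismatch rather than a reimplementation of what Instruments checks.

```swift
import Foundation

/// Hypothetical helper: reports whether an image must be resampled to fill a view,
/// by comparing the image's pixel dimensions with the view's pixel dimensions.
func requiresResampling(imageSize: CGSize, imageScale: CGFloat,
                        viewSize: CGSize, screenScale: CGFloat) -> Bool {
    let imagePixels = CGSize(width: imageSize.width * imageScale,
                             height: imageSize.height * imageScale)
    let viewPixels = CGSize(width: viewSize.width * screenScale,
                            height: viewSize.height * screenScale)
    return imagePixels != viewPixels
}

// The hard-coded 300x170 image shown in a 300x180 cell on a 2x screen.
let mismatched = requiresResampling(imageSize: CGSize(width: 300, height: 170), imageScale: 2,
                                    viewSize: CGSize(width: 300, height: 180), screenScale: 2)
```

Here mismatched is true: 600x340 pixels of image have to be stretched into 600x360 pixels of cell. Rendering at the cell's real size makes the two match again.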

Hard-coding numbers like this is rarely a good idea, so we’re going to have it calculated at runtime.

First, create this property inside the ViewController class:

```swift
var itemSize = CGSize.zero
```

That will hold the correct item size based on what is actually used, rather than using fixed numbers.

We’re going to set that value as soon as the view controller is loaded, so please add this in viewDidLoad():

```swift
// Read the real cell size from the layout rather than hard-coding it.
if let flowLayout = collectionViewLayout as? UICollectionViewFlowLayout {
    itemSize = flowLayout.itemSize
}
```

Finally, replace the existing let drawRect line with code that uses the itemSize property we just stashed away:

```swift
let drawRect = CGRect(x: 0, y: 0, width: itemSize.width, height: itemSize.height)
```

Once again, run that code through Instruments to verify that the problem is fixed: none of the collection view cells should be colored yellow any more. Progress!

Problem 3: Off-screen rendering

The last problem we're going to look at is off-screen image rendering. Ideally iOS wants to render pixels directly to the screen, because that's the fastest and most efficient approach.

However, that isn't always possible, and some things you do can inadvertently cause iOS to require a second drawing pass: it renders your image off screen first for some processing, then renders the finished product onto the actual display.

Off-screen rendering is slow, so Instruments helps us locate it. Go back to the Debug Options menu in Instruments, uncheck Color Misaligned Images and check Color Offscreen-Rendered Yellow instead – you should see our collection view cells glow yellow as a result.

This app hits a common problem because it applies shadows to each of the collection view cells. Shadows look great, and it's awesome that CALayer has built-in functionality to create them, but that functionality silently causes off-screen rendering.

The shadow rendering code in Core Animation is really clever: it literally traces around the edge of your view to figure out where the shadow ought to be applied, which means you can add shadowing to text or other complex shapes.

This whole process needs to happen as its own drawing pass off screen: iOS will allocate some extra RAM, draw your content there, then use that to figure out how the shadow should be drawn. Once that’s complete, the finished product can be rendered to the main screen in a second pass.

This whole second drawing pass can be eliminated entirely by setting the shadowPath property of our layer. This forces Core Animation to use a pre-defined path for its shadow rather than calculating one automatically, which means there’s no need for a second drawing pass.
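The sketch below contrasts the two approaches conceptually. It is not how Core Animation is implemented; both functions are hypothetical. The first derives a shadow from an alpha mask of content that must already have been drawn, which is why the automatic approach needs that extra pass. The second works from a known rectangle, as shadowPath does, so nothing has to be drawn first.

```swift
import Foundation

/// Conceptual model of an automatic shadow: the content's alpha mask (1 = drawn,
/// 0 = empty) must already exist, so the content has to be rendered first.
func shadowMask(fromRenderedAlpha alpha: [[UInt8]], offsetX: Int, offsetY: Int) -> [[UInt8]] {
    let height = alpha.count
    let width = alpha.first?.count ?? 0
    var shadow = Array(repeating: Array(repeating: UInt8(0), count: width), count: height)
    for y in 0..<height {
        for x in 0..<width where alpha[y][x] != 0 {
            // Move every drawn pixel by the shadow offset, discarding anything off the edge.
            let shadowX = x + offsetX
            let shadowY = y + offsetY
            if (0..<width).contains(shadowX) && (0..<height).contains(shadowY) {
                shadow[shadowY][shadowX] = 1
            }
        }
    }
    return shadow
}

/// With a known path (here a rectangle), the shadow's shape is simply the path
/// moved by the offset: nothing has to be rendered to work it out.
func shadowRect(forPath rect: CGRect, offset: CGSize) -> CGRect {
    CGRect(x: rect.origin.x + offset.width, y: rect.origin.y + offset.height,
           width: rect.size.width, height: rect.size.height)
}
```

The first function has to visit every pixel of content that was rendered somewhere first; the second is a single addition per coordinate, which is the kind of saving a predefined shadowPath allows.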

First, find this code in ViewController.swift:

```swift
cell.layer.shadowOpacity = 0.3
cell.layer.shadowOffset = CGSize(width: 5, height: 5)
```

Now add this directly below:

```swift
// Give Core Animation the shadow's shape up front so it doesn't have to work it out.
let rect = CGRect(x: 0, y: 0, width: itemSize.width, height: itemSize.height)
let path = UIBezierPath(rect: rect).cgPath
cell.layer.shadowPath = path
```

Run the program once more to make sure you've fixed the problem: if everything is working correctly, the yellow should be gone.

Now what?

This app is still a long way from perfect, and we’ll come back to it again in part two: how to find and fix memory leaks using Instruments. However, at this point we’ve used Instruments to find several display performance issues: we’ve eliminated most of our blending, we’ve eliminated all misaligned images, and we calculate our shadows up front rather than having Core Animation do it for us.

At the same time, I hope you’re getting used to a fundamental principle of using Instruments: when you make a change – any change, even a small one – you should head back to Instruments to verify that your fix actually worked. If you try to make two or more changes at the same time, it’s possible one might help and one might not, but because you did them together it’s hard to tell which.
