What’s new in iOS 12?

Learn what developer features have changed and how to use them

iOS 12 brought with it huge leaps forward in machine learning, Siri shortcuts that let you integrate your app with iOS, new ways to work with text, alert grouping so that users are bothered less frequently, and more. Part of this comes through huge improvements to Xcode, but I'll be covering it all here.

In this article I'll walk you through the major changes, complete with code examples, so you can try it for yourself. Note: you will need macOS Mojave if you want to use the new Create ML tools.

- You might also want to read what’s new in Swift 4.2.

- I also published API diffs for iOS 12 here.

NEW: For lots of examples of iOS 12 in action, check out my book Practical iOS 12!



Watch the video

If you prefer seeing these things live, I recorded a YouTube video showing off all the below and more.

Prefer reading instead? Then here we go…

Machine learning for image recognition

Machine learning (ML) was one of several major announcements from iOS 11, but it wasn't that easy to use – particularly for folks who hadn't studied the topic previously.

This is all changing now, because Apple introduced two new pieces of functionality. The first is Create ML, which is a macOS framework that's designed to make it trivial for anyone to create Core ML models to use in their app. The second – prediction batching – allows Core ML to evaluate many input sources in a more efficient way, making it less likely that newcomers would make basic mistakes.

Create ML has to be seen to be believed. Although I suspect it will change a little as the Xcode 10 beta evolves – the current UI feels a bit last-minute, to be honest – it's already quite remarkable in its features, performance, and results.

If you want to try it out, first create a new macOS playground, then give it this code:

```swift
import CreateMLUI

let builder = MLImageClassifierBuilder()
builder.showInLiveView()
```

Press the play button to run the code, then open the assistant editor to show the live view for Create ML. You should see "ImageClassifier" at the top, followed by "Drop Images To Begin Training" below.

Now we need some training data. If you just want to take it for a quick test run I've provided some images for you: click here to download them. These were all taken from https://unsplash.com/, and are available under a "do whatever you want" license.

If you'd rather create the data yourself, I think you'll be pleasantly surprised how easy it is:

- Create a new folder somewhere such as your desktop.

- Inside there, create two new folders: Training Data and Testing Data.

- Inside each of those, create new folders for each thing you want to identify.

- Now drag your photos into the appropriate folders.

That's it! The training data is used to create your trained model, and the testing data is there just to see how well the trained model does with pictures it hasn't seen before.

Warning: Do not put the same image into both training data and test data.

In my example data, I've provided images of cats and dogs so we can use Create ML to train a model to distinguish the two. So, I have a folder structure like this:

- Training Data

- Training Data > Cat

- Training Data > Cat > SomeCat.jpg

- Training Data > Cat > AnotherCat.jpg

- Training Data > Dog

- Training Data > Dog > SomeDog.jpg

- Training Data > Dog > AnotherDog.jpg

- Testing Data > Cat

- Testing Data > Cat > AThirdCat.jpg

And so on. It's a good idea to have at least 10 images in each data set, and you should try to have a roughly equal number of images in each category. Apple suggests you allocate about 80% of your images to training data, leaving 20% for testing.
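Sorting a handful of photos by hand is easy, but with hundreds of images it can help to script the 80/20 split. The sketch below is plain Swift, not part of Create ML: it shuffles a list of file names using a small seeded generator (SplitMix64, my own choice so the same seed always gives the same split) and then slices the shuffled list, which guarantees no image lands in both sets. The names SeededGenerator and splitForTraining are hypothetical.

```swift
// A small seeded random number generator (SplitMix64), so the same seed
// always produces the same shuffle. Illustrative helper, not a Create ML API.
struct SeededGenerator: RandomNumberGenerator {
    private var state: UInt64

    init(seed: UInt64) {
        state = seed
    }

    mutating func next() -> UInt64 {
        state &+= 0x9E3779B97F4A7C15
        var z = state
        z = (z ^ (z >> 30)) &* 0xBF58476D1CE4E5B9
        z = (z ^ (z >> 27)) &* 0x94D049BB133111EB
        return z ^ (z >> 31)
    }
}

// Hypothetical helper: shuffles the file names, then puts roughly
// `trainingFraction` of them into training and the rest into testing.
// Both halves come from one shuffled array, so they never overlap.
func splitForTraining(_ files: [String], trainingFraction: Double = 0.8, seed: UInt64 = 5) -> (training: [String], testing: [String]) {
    var generator = SeededGenerator(seed: seed)
    let shuffled = files.shuffled(using: &generator)
    let trainingCount = Int((Double(shuffled.count) * trainingFraction).rounded())
    let training = Array(shuffled.prefix(trainingCount))
    let testing = Array(shuffled.dropFirst(trainingCount))
    return (training, testing)
}

// Ten made-up cat photos: eight go to training, two to testing.
let cats = (1...10).map { "Cat\($0).jpg" }
let (trainingCats, testingCats) = splitForTraining(cats)
print("Training: \(trainingCats.count), testing: \(testingCats.count)")
```

You would still need to move the files into the Training Data and Testing Data folders yourself; the function only decides which file goes where.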

Now that you have your data ready, it's time to have Create ML start its training process. To do that, drag the whole Training Data folder into the playground assistant editor window, over where it says "Drop Images To Begin Training".

What you'll see now is Xcode flash through all the images repeatedly as it attempts to figure out what separates a cat from a dog visually. It takes under 10 seconds for me, and when it finishes you'll see "SUCCESS: Optimal Solution found", which means your model is ready.

The next step is to figure out how good the model is, and to do that we need to give it some test images. You'll see a new "Drop Images To Begin Testing" area has appeared, so drag the Testing Data folder into there. These are all images our model hasn't seen before, so it needs to figure out cat vs dog based on what it learned from our training data.

This testing process will take under a second to complete, but when it does you'll see a breakdown of how well your model performed. Obviously we're looking for 100% accuracy – and indeed that's precisely what we get with my cat/dog sample data – but if you don't reach that you might want to try adding more images for Create ML to learn from.

Once you're happy with your finished model, click the disclosure indicator to the right of "ImageClassifier" and provide a little metadata, then click Save to generate a Core ML model that you can then import into your app just like you would with iOS 11.

Machine learning for text analysis

As well as being able to process images, Create ML is also capable of analyzing text. The process is quite different in practice, but conceptually it's the same: provide some training data that teaches Create ML what various text "means", then provide some testing data that lets you evaluate how well the model performs.

Once again, I've provided some example training data so you can try it out yourself. This data comes from a paper called A Sentimental Education: Sentiment Analysis Using Subjectivity Summarization Based on Minimum Cuts by Bo Pang and Lillian Lee, published in the Proceedings of the ACL in 2004 – you can find out more here.

I've taken that data, and converted it into the format Create ML expects so it's ready to use. You can download it here.

If you'd rather create your own data, it's easy. First, create a new file called yourdata.json. For example, car-prices.json, or reviews.json. Second, give it content like this:

```json
[
    {
        "text": "Swift is an awesome language",
        "label": "positive"
    },
    {
        "text": "Swift is much worse than Objective-C.",
        "label": "negative"
    },
    {
        "text": "I really hate Swift",
        "label": "negative"
    }
]
```

The "text" field is whatever free-form text you want to train with, and the "label" field is what you consider that text to be. In the above example it marks reviews of Swift as being either positive or negative, but in my example data it uses thousands of movie reviews.
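If your text already lives in Swift values, you don't have to type this JSON by hand. Here is a minimal sketch, assuming your examples are in an array: a Codable struct whose property names match the "text" and "label" keys, encoded with JSONEncoder and written to disk. The struct name LabeledText and the temporary-directory output path are my own assumptions; point the URL wherever you keep your training files.

```swift
import Foundation

// Hypothetical type: its property names become the JSON keys, which must
// match the column names you later pass to Create ML ("text" and "label").
struct LabeledText: Codable {
    let text: String
    let label: String
}

let examples = [
    LabeledText(text: "Swift is an awesome language", label: "positive"),
    LabeledText(text: "Swift is much worse than Objective-C.", label: "negative"),
    LabeledText(text: "I really hate Swift", label: "negative")
]

// Encoding an array produces a top-level JSON array of objects,
// the same shape as the hand-written file above.
let encoder = JSONEncoder()
encoder.outputFormatting = .prettyPrinted
let json = try encoder.encode(examples)

// Assumed location for this sketch; swap in your own path, e.g. your desktop.
let url = FileManager.default.temporaryDirectory.appendingPathComponent("reviews.json")
try json.write(to: url)
print("Wrote \(examples.count) examples to \(url.path)")
```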

The next step is to create an MLDataTable from your data, which is just a structure that holds data ready to be processed. Once that's done, we can split the table into two parts so we have some training data and some testing data – again, the 80/20 rule is useful.

In code, it looks like this:

```swift
import CreateML
import Foundation

let data = try MLDataTable(contentsOf: URL(fileURLWithPath: "/Users/twostraws/Desktop/reviews.json"))
let (trainingData, testingData) = data.randomSplit(by: 0.8, seed: 5)
```

You'll need to change "twostraws" to whatever your username is – I just used a path to the file on my desktop.

Now for the important part: creating an MLTextClassifier from our data, telling it the names of the text column and label column:

```swift
let classifier = try MLTextClassifier(trainingData: trainingData, textColumn: "text", labelColumn: "label")
```

At this point you can go ahead and save the finished model, but it's usually a good idea to check your accuracy first just in case you have too little (or too much!) data.

This is done by reading the classification error rates that Create ML measured during training. Each classificationError value is a fraction between 0 and 1, so multiplying it by 100 gives a percentage between 0 (no errors) and 100 (nothing but errors):

```swift
let trainingErrorRate = classifier.trainingMetrics.classificationError * 100
let validationErrorRate = classifier.validationMetrics.classificationError * 100
```

This next line will then pass the testing data through the same model to see how it fares:

```swift
let evaluationMetrics = classifier.evaluation(on: testingData)
let errorRate = evaluationMetrics.classificationError * 100
```

Again, a value of 0 means no errors, which is what we ideally want.
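If those percentages feel abstract, it helps to remember that classificationError is the fraction of examples that received the wrong label. The plain-Swift sketch below reproduces that arithmetic by hand for a few made-up predictions. It's only an illustration of the calculation, not how Create ML computes its metrics internally, and the function name classificationError(predicted:actual:) is hypothetical.

```swift
// Hypothetical helper: the share of predictions that don't match the
// expected labels, as a fraction between 0 and 1.
func classificationError(predicted: [String], actual: [String]) -> Double {
    precondition(predicted.count == actual.count && !actual.isEmpty, "Need one prediction per label")
    let wrong = zip(predicted, actual).filter { pair in pair.0 != pair.1 }.count
    return Double(wrong) / Double(actual.count)
}

// Made-up example: the third review is misclassified, so one of four is wrong.
let actualLabels = ["positive", "negative", "negative", "positive"]
let predictedLabels = ["positive", "negative", "positive", "positive"]

let sampleErrorRate = classificationError(predicted: predictedLabels, actual: actualLabels) * 100
print("Error rate: \(sampleErrorRate)%")
print("Accuracy: \(100 - sampleErrorRate)%")
```

Comparing the training, validation, and testing figures side by side is the useful part: a model with a very low training error but a much higher testing error has probably memorized its training data rather than learned from it.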

Finally, we're ready to save. This takes two steps: create some metadata describing our model, then write it to a URL on our drive.

```swift
let metadata = MLModelMetadata(author: "Paul Hudson", shortDescription: "A model trained to handle sentiment analysis in movie reviews.", version: "1.0")
try classifier.write(to: URL(fileURLWithPath: "/Users/twostraws/Desktop/result.mlmodel"), metadata: metadata)
```

Note: All these fancy Create ML tools are only available in Swift – a first from Apple, I think.

Grouped alerts

Notifications can now be grouped by the system, so that one conversation between friends doesn't end up occupying a meter's worth of screen scrolling.

Normally you'd create a notification like this:

```swift
import UserNotifications

// assumes the user has already granted notification permission
func scheduleNotification() {
    let center = UNUserNotificationCenter.current()

    let content = UNMutableNotificationContent()
    content.title = "Late wake up call"
    content.body = "The early bird catches the worm, but the second mouse gets the cheese."
    content.categoryIdentifier = "alarm"
    content.sound = UNNotificationSound.default

    // deliver the notification five seconds from now
    let trigger = UNTimeIntervalNotificationTrigger(timeInterval: 5, repeats: false)

    let request = UNNotificationRequest(identifier: UUID().uuidString, content: content, trigger: trigger)
    center.add(request)
}
```

This has changed in iOS 12 thanks to three properties. threadIdentifier has existed since iOS 10, but iOS 12 now uses it to group notifications on screen; the two summary properties are new:

- The threadIdentifier property describes what group this message belongs to.

- The summaryArgument property lets you describe to the user what the message relates to – perhaps "from Andrew and Jill", for example.

- The summaryArgumentCount property is used when each message relates to more than one thing. For example, if you got a message saying "you have five invites" then another saying "you have three invites", you have two messages but eight invites. So, you'd set summaryArgumentCount to 5 on the first message and 3 on the second.

If you don't provide a thread identifier, iOS will automatically group all your notifications together. However, if you do provide one then it will group your notifications by identifier: three from Andrew and Jill, four from Steven, two from your husband, and so on.
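To make the counting rules concrete, here's a plain-Swift model of the idea. This is not UserNotifications code and not how iOS implements grouping: each hypothetical PendingNote carries a thread identifier and a summary count, and grouping by thread then summing the counts gives the kind of totals iOS summarizes. Notes with an empty thread identifier share one app-wide group, mirroring the behavior described above; the type name and the "(app)" bucket label are my own assumptions.

```swift
// Hypothetical model of a delivered notification, not a UserNotifications type.
struct PendingNote {
    let threadIdentifier: String      // empty string means "no thread identifier"
    let summaryArgument: String
    let summaryArgumentCount: Int     // how many items this one notification represents
}

let notes = [
    PendingNote(threadIdentifier: "invites", summaryArgument: "from Andrew and Jill", summaryArgumentCount: 5),
    PendingNote(threadIdentifier: "invites", summaryArgument: "from Andrew and Jill", summaryArgumentCount: 3),
    PendingNote(threadIdentifier: "Steven", summaryArgument: "from Steven", summaryArgumentCount: 1),
    PendingNote(threadIdentifier: "", summaryArgument: "", summaryArgumentCount: 1)
]

// Group by thread identifier; notes without one fall into a single app-wide group.
let groups = Dictionary(grouping: notes) { $0.threadIdentifier.isEmpty ? "(app)" : $0.threadIdentifier }

// Sum summaryArgumentCount per group: the "invites" thread is two notifications but eight items.
let totals = groups.mapValues { group in group.reduce(0) { $0 + $1.summaryArgumentCount } }

for (thread, total) in totals.sorted(by: { $0.key < $1.key }) {
    print("\(thread): \(groups[thread]!.count) notifications, \(total) items")
}
```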

To try it out, we can create a simple loop:

```swift
import UserNotifications

let center = UNUserNotificationCenter.current()

for _ in 1...10 {
    let content = UNMutableNotificationContent()
    content.title = "Late wake up call"
    content.body = "The early bird catches the worm, but the second mouse gets the cheese."
    content.categoryIdentifier = "alarm"
    content.userInfo = ["customData": "fizzbuzz"]
    content.sound = UNNotificationSound.default

    // every notification shares the same thread, so iOS groups them together
    content.threadIdentifier = "Andrew"
    content.summaryArgument = "from Andrew"

    let trigger = UNTimeIntervalNotificationTrigger(timeInterval: 5, repeats: false)
    let request = UNNotificationRequest(identifier: UUID().uuidString, content: content, trigger: trigger)
    center.add(request)
}
```

That will create ten notifications, all grouped as "from Andrew".

There's one extra new option, which is the ability to create and show critical alerts – alerts that are delivered loudly even when the device is muted or set to Do Not Disturb. Critical alerts require a special entitlement from Apple, and I doubt they will hand out permission easily!

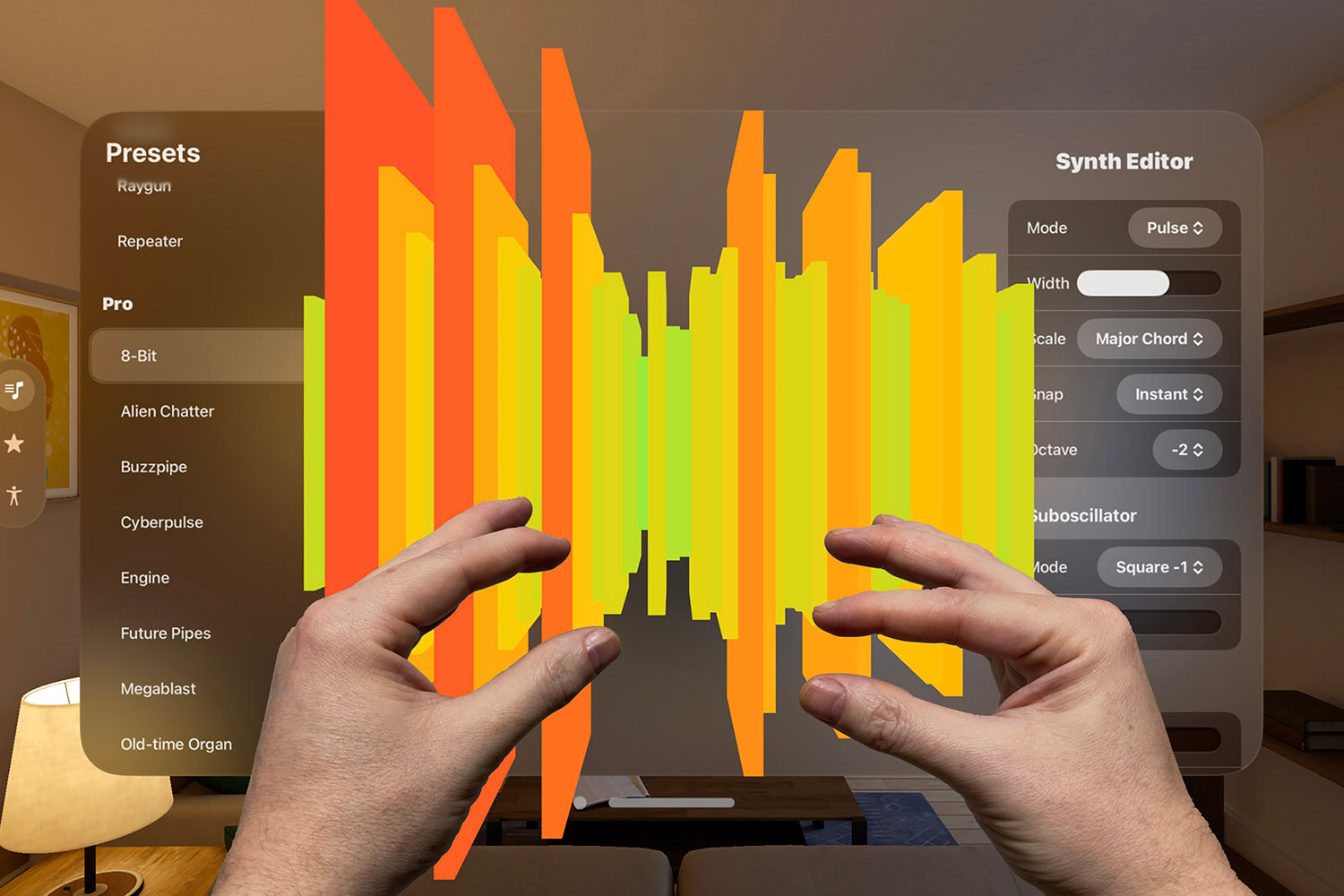

Shortcuts

iOS 12 introduces the ability for users to trigger actions in our app using Siri, regardless of what the action is.

Apple has given us two APIs to use, one very easy and one very difficult. I suspect most folks will go with the easy option to begin with and gauge user feedback – if there's enough usage they can then invest more time to implement the more complex solution, but there's no reason to go for that unless you do see good engagement.

If you want to try it out, most of the work is done using the same NSUserActivity class that does so much other work on iOS – Spotlight search, Handoff, and SiriKit. You can create as many of these as you need, attaching them to your view controllers as needed. However, you should only expose shortcuts that can be used at any time, because Siri isn't able to understand when an activity shouldn't be available.

Note: At this time, shortcuts appear to work only on actual devices.

First, we need to tell iOS what activities we support. So, open your project's Info.plist file and add a new row called NSUserActivityTypes. Make it an array, then add a single item to it: com.hackingwithswift.example.showscores. This identifies one activity uniquely to iOS.

Now we need to register that activity when the user does something interesting. If you're just trying it out, try adding this in viewDidAppear():

```swift
// give our activity a unique ID
let activity = NSUserActivity(activityType: "com.hackingwithswift.example.showscores")

// give it a title that will be displayed to users
activity.title = "Show the latest scores"

// allow Siri to index this and use it for voice-matched queries
activity.isEligibleForSearch = true
activity.isEligibleForPrediction = true

// attach some example information that we can use when loading the app from this activity
activity.userInfo = ["message": "Important!"]

// give the activity a unique identifier so we can delete it later if we need to
activity.persistentIdentifier = NSUserActivityPersistentIdentifier("abc")

// make this activity active for the current view controller – this is what Siri will restore when the activity is triggered
self.userActivity = activity
```

That donates the activity to Siri – it tells Siri what the user was doing so it can try to figure out a pattern. However, we still need to write some code that gets triggered when the action is selected. So, add this to your AppDelegate class:

```swift
func application(_ application: UIApplication, continue userActivity: NSUserActivity, restorationHandler: @escaping ([UIUserActivityRestoring]?) -> Void) -> Bool {
    if userActivity.activityType == "com.hackingwithswift.example.showscores" {
        print("Show scores...")
        return true
    }

    // we don't recognize this activity, so tell iOS we didn't handle it
    return false
}
```

That's all our code done, so if you're using a real device you can run the app to register the shortcut, then add it to Siri. You can do this by going to the Settings app, then selecting Siri & Search. There you'll see any activities that have been registered by the system, and you can add any you please. You'll need to record a voice command for each, but that only takes a couple of seconds.
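The activity type string now appears in three places: Info.plist, the donation code, and the app delegate. A typo in any one of them quietly breaks the shortcut, so one option (my suggestion, not something Apple requires) is to keep every identifier in a single enum and switch over it. The enum AppActivity and the function handleActivity(type:) are hypothetical; only the string itself comes from the example above.

```swift
// Hypothetical enum listing every activity type the app declares in
// Info.plist's NSUserActivityTypes array.
enum AppActivity: String, CaseIterable {
    case showScores = "com.hackingwithswift.example.showscores"
}

// Returns true only for activity types we know how to handle, which is
// what application(_:continue:restorationHandler:) should report back.
func handleActivity(type: String) -> Bool {
    guard let activity = AppActivity(rawValue: type) else {
        return false
    }

    switch activity {
    case .showScores:
        print("Show scores...")
        return true
    }
}
```

Inside the app delegate you could then return handleActivity(type: userActivity.activityType), and create the donation with NSUserActivity(activityType: AppActivity.showScores.rawValue). Adding a new case later also makes the compiler point out every switch that needs updating.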

For shortcut testing purposes, there are two useful options you should enable. Both are in the Settings app, under Developer: Display Recent Shortcuts, and Display Donations On Lock Screen. These help you check exactly which donations were received by Siri, and will always show your shortcuts in the Siri search rather than whichever ones Siri recommends. Again, these only work on a real device for now.

Working with text

NSLinguisticTagger was fêted during last year's WWDC, because it was now powered by Core ML and able to perform all sorts of clever calculations at incredible speed.

Well, this year it's gone. Well, not gone – you can still use it if you want. But we now have a better solution called the Natural Language framework. This is, for all intents and purposes, a Swiftified version of NSLinguisticTagger: apart from being more Swifty, its API is almost identical to the old linguistic tagger code.

Let's start with a simple example. To recognize the language of any text string, large or small, use this:

```swift
import NaturalLanguage

let string = """
He thrusts his fists
Against the posts
And still insists
He sees the ghosts
"""

if let lang = NLLanguageRecognizer.dominantLanguage(for: string) {
    if lang == .english {
        print("It's English!")
    } else {
        print("It's something else...")
    }
}
```

As you can see, dominantLanguage(for:) returns an optional NLLanguage value holding its best guess.

There are a few things to keep in mind:

- It looks for the dominant language, so if you provide a text string with multiple languages then it will return the most important one.

- Sending in very small amounts of text will likely be problematic – without any context, Core ML can't make a good choice.

- The result is optional because it's possible no language will match your input string.
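Because tiny strings give unreliable guesses, you might want to check there's enough text before asking for one. Here is a minimal sketch under clear assumptions: hasEnoughContext(_:minimumWords:) is a hypothetical helper, its word count is a rough split on non-alphanumeric characters, and the three-word minimum is an arbitrary threshold of mine rather than an Apple recommendation. The real dominantLanguage(for:) call is only compiled where the NaturalLanguage framework exists.

```swift
import Foundation
#if canImport(NaturalLanguage)
import NaturalLanguage
#endif

// Hypothetical pre-check: roughly count words before asking for a language guess.
// The three-word minimum is an arbitrary assumption; tune it for your own text.
func hasEnoughContext(_ text: String, minimumWords: Int = 3) -> Bool {
    let words = text
        .components(separatedBy: CharacterSet.alphanumerics.inverted)
        .filter { !$0.isEmpty }
    return words.count >= minimumWords
}

#if canImport(NaturalLanguage)
// Returns a language code such as "en", or nil when there's too little
// text or the recognizer can't decide.
func detectLanguage(in text: String) -> String? {
    guard hasEnoughContext(text) else { return nil }
    return NLLanguageRecognizer.dominantLanguage(for: text)?.rawValue
}
#endif
```

Returning nil for short input keeps the same shape as dominantLanguage(for:) itself, so callers only have one "no answer" case to handle.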

This feature was also possible using NSLinguisticTagger. Another feature that you might recognize is the ability to scan through a sentence, paragraph, or even entire document looking for tokens such as individual words.

For example, this code will load a string and break it up into individual words:

```swift
import Foundation
import NaturalLanguage

let string = """
He thrusts his fists
Against the posts
And still insists
He sees the ghosts
"""

let tokenizer = NLTokenizer(unit: .word)
tokenizer.string = string

// each token comes back as a range, so convert them into substrings
let tokens = tokenizer.tokens(for: string.startIndex..<string.endIndex).map { string[$0] }
```

If you'd prefer, you can also loop over each token by hand. This will pass each token to a closure you provide, and you need to return either true ("I want to carry on processing") or false ("I'm done").

Here's that in code:

```swift
tokenizer.enumerateTokens(in: string.startIndex..<string.endIndex) { (range, attrs) -> Bool in
    print(string[range])
    print(attrs)

    // return false here instead to stop processing early
    return true
}
```

In case you were wondering, the "attrs" value describes the attributes of the token – whether it is numeric, symbolic, or an emoji, for example.
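The true/false return value is easy to get backwards, so here's a plain-Swift imitation of the same contract. It is not NLTokenizer: the hypothetical enumerateWords(in:using:) hands each word's range to a closure and stops as soon as the closure returns false. Treating any run of letters or digits as a word is a deliberate simplification; NLTokenizer understands languages and edge cases this doesn't.

```swift
// Hypothetical stand-in for enumerateTokens(in:using:): calls `block` with
// each word's range and stops early when the block returns false.
func enumerateWords(in text: String, using block: (Range<String.Index>) -> Bool) {
    var index = text.startIndex

    while index < text.endIndex {
        // skip anything that isn't a letter or number
        guard text[index].isLetter || text[index].isNumber else {
            index = text.index(after: index)
            continue
        }

        // extend the range until the word ends
        var end = index
        while end < text.endIndex && (text[end].isLetter || text[end].isNumber) {
            end = text.index(after: end)
        }

        if !block(index..<end) {
            return // the caller said "I'm done"
        }

        index = end
    }
}

let poem = "He thrusts his fists\nAgainst the posts"
var firstThree: [Substring] = []

enumerateWords(in: poem) { range in
    firstThree.append(poem[range])
    return firstThree.count < 3 // keep going until we have three words
}

print(firstThree)
```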

Another useful ability of Natural Language is that it can parse text looking for names of things. For example, this defines a simple text string and creates a name tagger to figure out what's inside:

```swift
import NaturalLanguage

let text = "Steve Jobs, Steve Wozniak, and Ronald Wayne founded Apple Inc in California."

let tagger = NLTagger(tagSchemes: [.nameType])
tagger.string = text

// skip punctuation and whitespace, and treat multi-word names as single tokens
let options: NLTagger.Options = [.omitPunctuation, .omitWhitespace, .joinNames]

// the kinds of names we care about
let tags: [NLTag] = [.personalName, .placeName, .organizationName]

tagger.enumerateTags(in: text.startIndex..<text.endIndex, unit: .word, scheme: .nameType, options: options) { tag, tokenRange in
    if let tag = tag, tags.contains(tag) {
        print("\(text[tokenRange]): \(tag.rawValue)")
    }

    return true
}
```

As you can see, it asks Natural Language to look for three kinds of tag: personal names, place names, and organization names.

When it finishes, that should produce the following output:

```
Steve Jobs: PersonalName
Steve Wozniak: PersonalName
Ronald Wayne: PersonalName
Apple Inc: OrganizationName
California: PlaceName
```

If all this seems eerily similar to NSLinguisticTagger, you're spot on: the two are almost identical, although Natural Language has a much nicer API for Swift users.

Small extras

Those are the main features, but a few more stick out as being interesting.

- UIWebView is deprecated for real now. It was marked as "Legacy" in Xcode 9.3, but is now formally deprecated. You should use WKWebView instead – perhaps start with my ultimate guide to WKWebView.

- There is now UIUserInterfaceStyle.dark for when our iOS app is in dark mode. This cannot currently be activated on an iPhone, but the rest of the infrastructure is there – you can load dark mode images, read UI changes when dark mode is activated, and so on.

- Text input fields now support password rules to help the system generate better, stronger passwords. This isn't working too well in the current beta, but that should hopefully change soon.

- The UIImagePNGRepresentation() and UIImageJPEGRepresentation() functions have been replaced with the pngData() and jpegData(compressionQuality:) methods on UIImage.
