What's new in iOS 11.1 for developers

After the behemoth of iOS 11, this is just a patch release.

iOS 11 introduced a huge range of changes that are already revolutionizing app development, not least Core ML, ARKit, and drag and drop. Following that epic release, the first beta of iOS 11.1 was released today, and so far it mainly seems to be a few small tweaks to APIs that were left out of the initial iOS 11.0 release.

Note: iOS 11.1 is in early beta, and may continue to add features before reaching final release; this article will be updated as necessary.

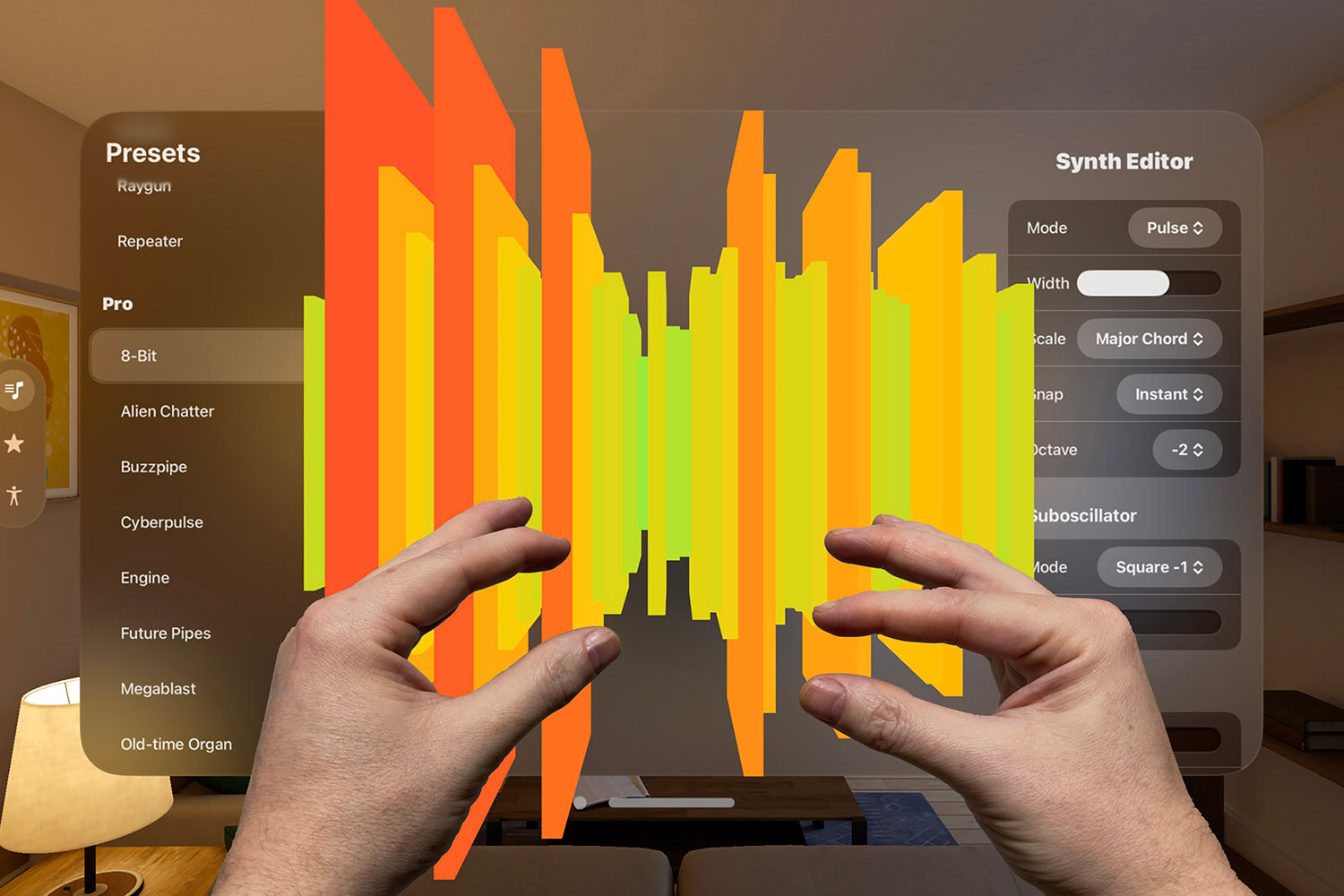

There are two new APIs you should be aware of: one for activating the TrueDepth camera built into the new iPhone X, and one for detecting system pressure when capturing media. Both of these have been retrospectively marked @available(iOS 11.0, *), which means they shipped with iOS 11 but weren't made available until now.

First, the new TrueDepth camera. Here's how the Apple SDK describes it:

A device that consists of two cameras, one YUV and one Infrared. The infrared camera provides high quality depth information that is synchronized and perspective corrected to frames produced by the YUV camera. While the resolution of the depth data and YUV frames may differ, their field of view and aspect ratio always match.

You're probably already using the AVCaptureDevice.default() method for gaining access to media capture devices, and the TrueDepth camera fits right into that. Here's an example to get you started:

let trueDepthCamera = AVCaptureDevice.default(.builtInTrueDepthCamera, for: .video, position: .front)

As for the new system pressure system, this looks like it's designed to work around hardware limitations – if a device is unable to continue capturing media at the rate requested by a developer, the system pressure system is designed to interrupt the capture session until things calm down a little.

You can check the system pressure for your capture device by reading its new systemPressureState property. Here's how the iOS 11.1 SDK describes it:

This property indicates whether the capture device is currently subject to an elevated system pressure condition. When system pressure reaches AVCaptureSystemPressureLevelShutdown, the capture device cannot continue to provide input, so the AVCaptureSession becomes interrupted until the pressured state abates. System pressure can be effectively mitigated by lowering the device's activeVideoMinFrameDuration in response to changes in the systemPressureState. Clients are encouraged to implement frame rate throttling to bring system pressure down if their capture use case can tolerate a reduced frame rate.

So, you might write code like this:

if trueDepthCamera?.systemPressureState.level == .critical {
    // take emergency action to avoid shutdown
}

This property is KVO-compliant, so you could also monitor it passively.
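Here's a rough sketch of what that passive monitoring could look like, using Swift 4's block-based key-value observing and throttling the frame rate through activeVideoMinFrameDuration, the property the SDK text mentions. The PressureMonitor class, the recommendedFrameRate(for:) helper, and the 30/20/15 frame rates are all hypothetical choices made for illustration rather than anything Apple recommends, and the code assumes the device's active format supports those rates.

```swift
import AVFoundation

// Hypothetical mapping from pressure level to a target frame rate.
// The numbers are illustrative assumptions, not Apple recommendations.
@available(iOS 11.1, *)
func recommendedFrameRate(for level: AVCaptureDevice.SystemPressureState.Level) -> Int32 {
    if level == .serious { return 20 }
    if level == .critical || level == .shutdown { return 15 }
    return 30 // .nominal and .fair: no throttling
}

// Hypothetical wrapper that watches one capture device for pressure changes.
@available(iOS 11.1, *)
final class PressureMonitor {
    private let device: AVCaptureDevice
    private var observation: NSKeyValueObservation?

    init(device: AVCaptureDevice) {
        self.device = device
        // The closure runs whenever systemPressureState changes.
        observation = device.observe(\.systemPressureState, options: [.new]) { [weak self] _, change in
            guard let state = change.newValue else { return }
            self?.throttle(for: state.level)
        }
    }

    private func throttle(for level: AVCaptureDevice.SystemPressureState.Level) {
        let fps = recommendedFrameRate(for: level)
        do {
            try device.lockForConfiguration()
            defer { device.unlockForConfiguration() }
            // A longer minimum frame duration caps the frame rate at a lower value.
            // Assumption: the active format supports this rate; real code should
            // check device.activeFormat.videoSupportedFrameRateRanges first.
            device.activeVideoMinFrameDuration = CMTime(value: 1, timescale: fps)
        } catch {
            print("Could not lock the device for configuration: \(error)")
        }
    }
}
```

You would keep a PressureMonitor alive for as long as the capture session runs, for example by storing it in a property next to the session; once it is deallocated the observation stops.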

Where things get interesting is why there's system pressure. The SDK provides three reasons, all stored in the systemPressureState.factors property:

.systemTemperature: "Indicates that the entire system is currently experiencing an elevated thermal level."

.peakPower: "Indicates that the system's peak power requirements exceed the battery's current capacity and may result in a system power off."

.depthModuleTemperature: "Indicates that the module capturing depth information is operating at an elevated temperature. As system pressure increases, depth quality may become degraded."

We've all experienced an "elevated thermal level" before, because it's marketing speak for "damn my iPhone is almost burning my hand," but the other two are new. If the .peakPower factor is triggered, it literally means the system is using so much power that the battery can't supply it all, and the system may shut down. .depthModuleTemperature is also interesting because it tells us that the depth capturing system will gracefully degrade if it starts overheating – it will be interesting to see what effect that has on Face ID.
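Because factors is an option set, more than one reason can be active at once. As a small sketch, here's one way you might turn it into something readable for logging; pressureReasons(for:) and its label strings are hypothetical names invented for this example, not part of the SDK.

```swift
import AVFoundation

// Hypothetical helper: converts the factors option set into readable labels.
@available(iOS 11.1, *)
func pressureReasons(for factors: AVCaptureDevice.SystemPressureState.Factors) -> [String] {
    var reasons = [String]()
    if factors.contains(.systemTemperature) { reasons.append("system temperature") }
    if factors.contains(.peakPower) { reasons.append("peak power") }
    if factors.contains(.depthModuleTemperature) { reasons.append("depth module temperature") }
    return reasons
}

// Usage, assuming `camera` is an AVCaptureDevice you already hold:
// let reasons = pressureReasons(for: camera.systemPressureState.factors)
// print(reasons.joined(separator: ", "))
```

An empty array means no factor is currently contributing, which is what you'd expect to see when the pressure level is nominal.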

The beta also includes other non-API changes, although these are less tangible because they aren't API code we can see in Xcode. Here's what UIKit engineer Tyler Fox said on Twitter:

There are some important changes and fixes from 11.0, and the 11.1 simulator most closely reflects what your app will do on actual hardware.

— Tyler Fox (@smileyborg) September 27, 2017

So, iOS 11.1 is shaping up to be a quiet release, and that's no bad thing – it will take a few months for developers to catch up with everything new in the main iOS 11 release, so a little bit of slack and tweaking is quite welcome.
